Originally posted on Marcus on Artificial Intelligence

A 2016 New York Times article on self-driving cars began: "Major automakers have poured billions into development, and tech companies have been testing versions of them in American cities. How does it work?"

Sorry to break it to you, and I'm hardly the only one saying it, but driverless cars (still) have a problem. The problem, which I've mentioned dozens of times over the past few years, is edge cases: unusual conditions that often confuse machine learning algorithms. The more complex the domain, the more unexpected outliers there will be. And the real world is really complicated and messy. It's impossible to list all the crazy and weird things that can happen. So far, no one has figured out how to build a self-driving car that can deal with this problem.

One of the first times I pointed out how difficult edge cases can be was in an interview I did in 2016, when I had grown tired of the hype. It is strange to read the transcript today, because it is more or less as true now as it was then; almost every word still applies.

All of this apparent progress is fueled by the ability to use brute-force techniques on a scale we've never used before. That is what originally drove Deep Blue for chess and the Atari game systems. It is what drives most of what people are excited about. Meanwhile, when it comes to robots in the home or driving on the street, it doesn't scale to the real world.

… think of self-driving cars. They excel in everyday situations. If you put them out on a clear day in Palo Alto, they're great. If you put them in a place with snow, rain, or anything else they haven't seen before, they're going to have a hard time. There was a great article by Steven Levy on Google's automated car factory where he explained that the big win in late 2015 was that these systems finally recognized leaves.

It's great that they recognize leaves, but there are a lot of similar scenarios where something isn't very common, so there isn't much data. You and I can reason with common sense. We can try to figure out what a thing is and how it got there, but the systems just memorize things. So this is a real limit...

The same can apply to behavior. Try it in Palo Alto and all the drivers are great; try it in New York and you will see a completely different driving style. The system may not generalize well to the new driving style...

You and I can reason about the world. When we see a parade, we may not have much data about parades, but we see it and say, "There are a lot of people, let's stop and wait." Maybe the car gets that, or maybe it gets confused by the mass of people and doesn't recognize the scene, because it doesn't quite fit into its separate categories...

In general, the big problem with the whole machine learning approach is that it relies on a training set and a test set, where the test set is similar to the training set. Training is basically all the data you have memorized, and the test set is what happens in the real world.

When using machine learning techniques, a lot depends on how similar the test data set is to the training data you've seen before.
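To make that dependence concrete, here is a minimal sketch; it is a toy illustration, not anything from the interview or from a real driving system. It fits a straight line to a curved function on a narrow range of inputs, then measures the error on fresh inputs from the same range and on inputs from a range it never saw. The function `true_fn`, the input ranges, and the helper names are all assumptions chosen for illustration.

```python
import random


def true_fn(x):
    """Hypothetical 'real world' relationship the model must learn."""
    return x * x


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b


def mean_abs_error(model, xs):
    """Average absolute gap between the fitted line and the truth."""
    a, b = model
    return sum(abs(a * x + b - true_fn(x)) for x in xs) / len(xs)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Training data: inputs drawn only from the "familiar" range [0, 1].
train_x = [rng.uniform(0.0, 1.0) for _ in range(200)]
model = fit_line(train_x, [true_fn(x) for x in train_x])

# Test set 1: new inputs from the same range as training.
similar_x = [rng.uniform(0.0, 1.0) for _ in range(200)]
# Test set 2: inputs from a range the model has never seen.
shifted_x = [rng.uniform(3.0, 4.0) for _ in range(200)]

in_dist_error = mean_abs_error(model, similar_x)
shifted_error = mean_abs_error(model, shifted_x)

print(f"error on familiar inputs:   {in_dist_error:.3f}")
print(f"error on unfamiliar inputs: {shifted_error:.3f}")
```

The fitted line looks perfectly adequate when the test inputs resemble the training inputs and falls apart when they don't, even though nothing about the model changed. Real systems are vastly more sophisticated than a straight line, but the dependence on test data resembling training data is the same kind of dependence.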

Snow and rain are not as big a problem as they used to be, but edge cases are far from solved. The safety of self-driving cars still depends heavily on the vagaries of the data and on the lack of adequate reasoning. (If this doesn't remind you of LLMs, you're not paying attention.)

§

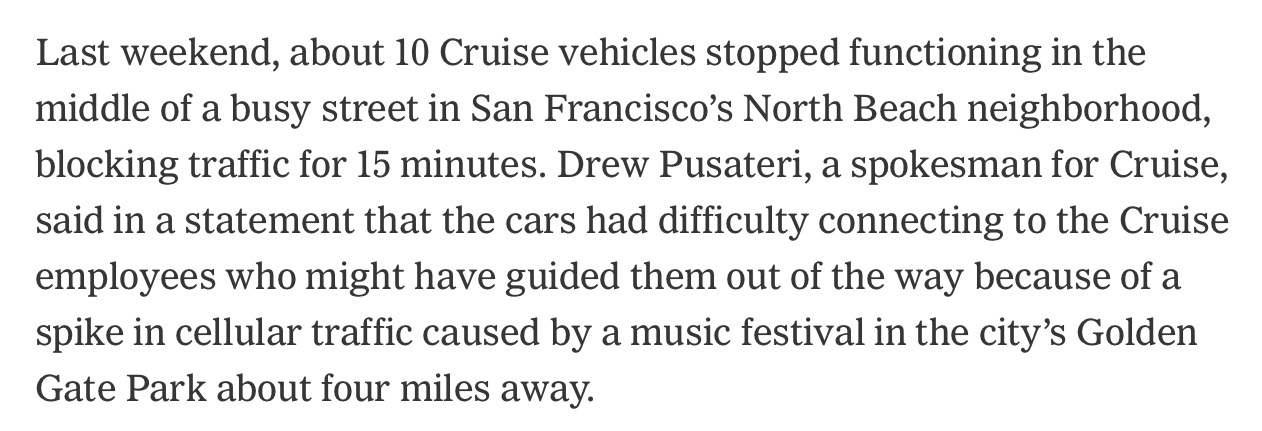

However, the drive to deploy this immature technology is relentless. Last week, the California Public Utilities Commission approved Cruise and Waymo, the two largest companies trying to build self-driving cars, for round-the-clock operation in San Francisco, giving the two companies ample room to test their cars.

A few hours later, a well-known tech optimist all but declared victory, writing on X:

Well, no, not really. First, approval to test vehicles does not mean that they work. It was a (badly) calculated gamble by some bureaucrats, not a definitive, peer-reviewed scientific consensus.

In fact, we don't have truly self-driving cars yet. As Cade Metz explained to me a few months ago on my podcast Humans vs. Machines, every "self-driving car" on public roads has either a human safety driver on board or humans monitoring remotely, ready to assist if problems arise.

And it's not just that every self-driving car still needs a babysitter. It's that they still struggle, too (which is what the whole Metz episode was about). Naturally, deploying them at scale was bound to lead to chaos. And it didn't take long. One of my colleagues in the field wrote to me on Monday, shortly after the CPUC decision: "I'm sure it will be a &)@$ show."

He was right. And it didn't take long to find out.

§

Less than a week, in fact. Five days later, the New York Times reported one of my own favorite AI malfunctions:

Stuck in concrete?? Now that's a new edge case. Even wilder than a Tesla crashing into a parked plane.

And no matter how much data these systems collect, there will always be something new.

§

Ten other "self-driving" vehicles broke down last week after losing contact with their mission control center, leaving them stranded in the middle of a busy street:

§

Self-driving cars have enjoyed every advantage: over $100 billion in funding, fawning press that even Taylor Swift (who has accomplished far more) might envy, and now a license to roam, known problems and established reality notwithstanding. The unknown unknowns never seem to end.

Honestly, I don't know what the California Public Utilities Commission was thinking. None of the independent scientists I know who deal with these matters support this idea.

And it was foolish to go full throttle without a serious, principled solution to the edge-case problem. It was literally an accident (or series of accidents) waiting to happen.

Hopefully some lessons will be learned.

§

And not just about self-driving cars, but about mission-critical machine learning in general.

Edge cases are everywhere. They are in driving, they are in medicine, and they will be there by the millions in humanoid robots, if we ever deploy them. Anyone who thinks any of this will be easy is wrong.

In another world, one driven less by money and more by a desire to build AI you can trust, we might pause and ask a very specific question: have we found the right technology for handling the edge cases that permeate our messy real world? And if not, shouldn't we stop forcing a square peg into a round hole and focus on developing new methods for dealing with the endless variety of edge cases?

If we don't, the next few years will likely bring a replay of what we see now, for self-driving cars, robot doctors, robot psychiatrists, universal virtual assistants, home robots, and much more.

§

In closing, I wrote this article on a plane, a 747-400 to be exact. The 747 was on autopilot for most of the nine-hour flight, but it also had a human crew, which is how I like it: with humans in the loop.

I wouldn't trust a pilotless plane, and I don't think a semi-autonomous car has earned that trust yet either.

Gary Marcus is the co-founder and CEO of ca-tai.org, and he spoke before the US Senate Judiciary Subcommittee on AI Oversight in May about many (but not all) AI risks. To learn more about self-driving cars, see Episode 2 of the eight-part podcast, Humans vs. Machines.
